Blue Books Will Not Save Us

There are more harms caused by AI in heaven and earth than are dreamt of in your philosophy

As an active participant in the debate around AI use in education, I wanted to very briefly touch on something I’ve seen often as a proposed solution to what many are calling the “crisis” we face today. This solution is simple: RETVRN to blue books!

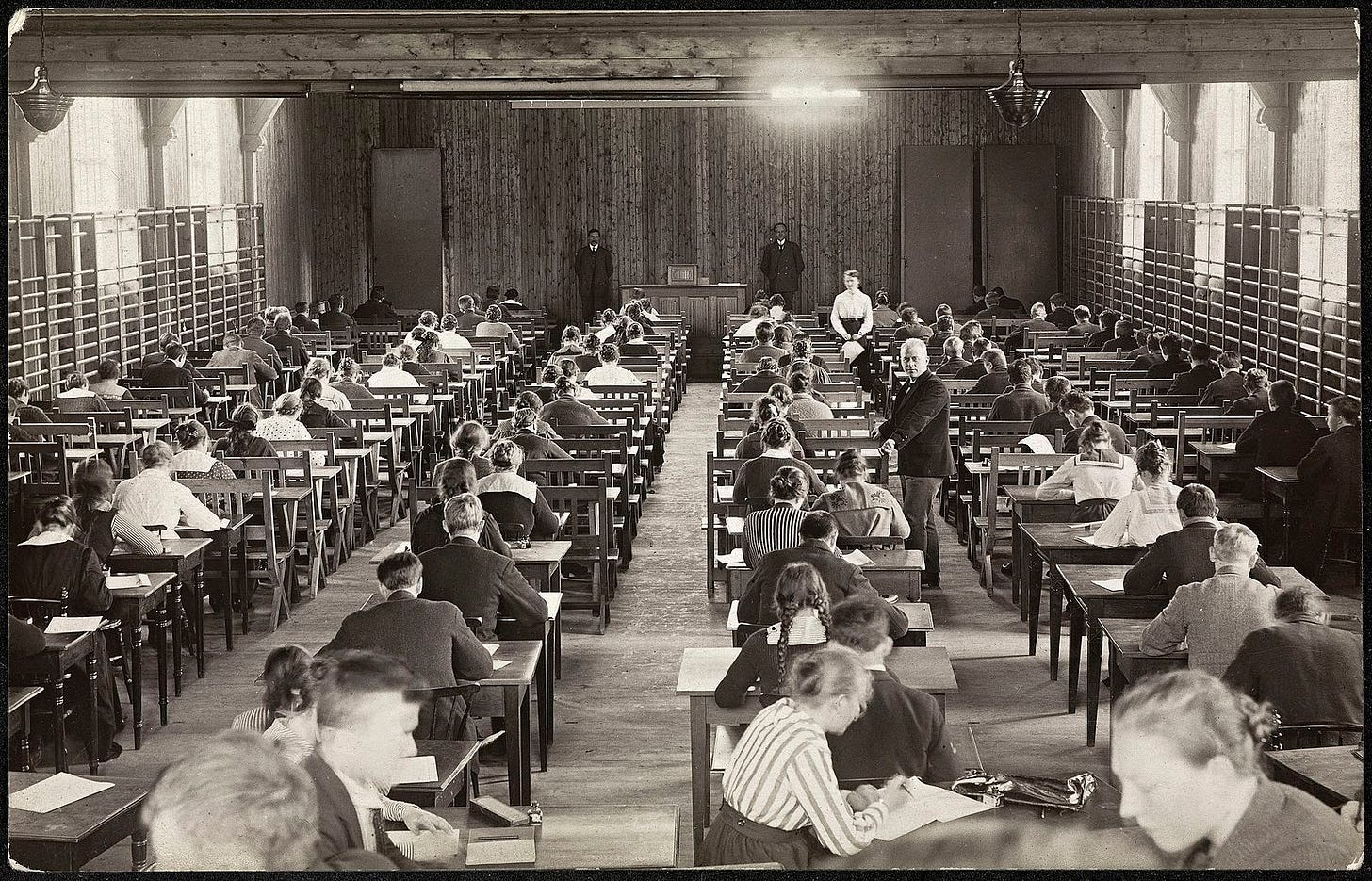

If you never used blue books in your education, you’re missing out. These small, inexpensive devices have created decades of consternation for students across the world. Unassuming in appearance, these books bind together a dozen sheets of lined notebook paper and are designed to accompany an exam sheet that tells you what sort of thing you ought to write in the book. A blue book exam requires you to use your own hand, your own brain, your own latent intellectual resources to answer a variety of questions, which will then be graded by your professor or course teaching assistant (TA), who is given the unenviable task of deciphering your handwriting.

My undergraduate exam-taking consisted almost entirely of blue book exams, and since 2017 I have been assigning them in my own courses or grading them in courses for which I was serving as a TA. I am thus not unfamiliar with blue books as pedagogical tools, and in fact I am quite fond of them. So I was somewhat surprised that when I began complaining about AI in higher ed, many people responded with the same advice: just assign blue books, and all your problems will be solved! Many seemed to assume that if I was struggling with student use of AI in my courses, I must be failing to avail myself of this simple resource, available to any instructor with the initiative and the time to grade handwritten material.

While I appreciate the sentiment and will defend blue books to my dying breath, they are not the silver bullet some have made them out to be. This is for two primary reasons: the first is the loss of other pedagogical tools, and the second is the harm done to students at the hands of AI outside the classroom.

First, what tools are we losing to AI that blue books cannot replace? I would argue that the traditional essay retains its usefulness as an educative tool, if students engage with it correctly. I remember fondly many nights spent in libraries alongside other students in the same courses, perusing scholarly sources, taking extensive notes, and attempting to power through an appropriate word count with a sufficiently coherent argument. Writing, revising, receiving feedback on, and further revising a piece of writing cannot be replicated by a timed, in-class exam.

In short, the process of writing a paper outside of class cannot simply be replicated in a blue book exam, and something serious is lost if we give up entirely on the traditional essay, whether those essays are more analytic, argumentative, or research-based. While I would love to trust my students to perform these out-of-class assignments, the truth is that in a world with increasingly sophisticated generative AI, I have to believe that a good number of my students will be using generative AI in some form on any assignment I give them that requires outside-of-class work.

Second, what harms are students suffering at the hands of generative AI that cannot be cured by simply giving them blue book exams? It may be true that blue book exams are by nature harder to cheat on, and thus might encourage more serious study and reflection on the part of students. The unfortunate reality, however, is that generative AI has infected all student activities, both in and outside of class. When professors assign readings, students frequently use AI to summarize those readings rather than reading them. When professors provide study guides, students frequently use AI to fill out the example questions rather than attempting them themselves.

This leads to some very amusing quirks even for those of us who use blue book exams. I recall one exam I gave before leaving my previous institution. In this class, I gave students a study guide with a set of questions that might appear on the exam, and then provided some choice on the exam itself. I noticed a strange phenomenon: a set of students all made the same very obvious error on their essays, one that someone who had done the readings and studied independently likely would not have made. The similarity and specificity of the error suggested to me that either these students had all studied together and made the same mistake, or else, as I consider more likely, they had used generative AI to answer my essay prompt, roughly memorized its output, and reproduced it on the exam. In such a scenario, students are shortcutting every step of the educative use of readings, study guides, and exams. If the use of these tools as a substitute for one’s own reason is as harmful as I have argued elsewhere that it is (and if these tools are as harmful as I intend to argue at length, drawing on historical sources, in my pending book project), then a couple of outlying exams that require a bit more brainpower will not be sufficient to counterbalance the harms we are all so concerned about.

Again, I love blue books. I use them in all of my courses. I intend to keep using them, and I agree that they solve the most immediate problem of generative AI, namely stopping students from cheating on a single assignment in a single course. I can know with relative reliability that students are not using ChatGPT to write the essays they hand in to me in blue books in class, and in today’s world, that is a boon to me. But even using blue books for all of my tests does not stop the general trend of mental degradation at the hands of generative AI. There is, first, a kind of collective action problem, where universities as a whole are unwilling to shift back to unaccommodated, handwritten exams. But there is also a kind of teaspoon-against-the-tide problem, where even if a whole host of professors at a whole host of universities attempt to bully our students into intellectual rigor, those students will nevertheless be victims of, and willing participants in, degrading uses of technology outside our classes that we are powerless to stop. So, while I would never discourage the use of blue books, I would caution those who offer simple solutions that the real solutions to our problems are never quite so simple as they appear.

Agreed that blue books will not save us. Your thoughts are very similar to mine. https://joshbrake.substack.com/p/blue-books-and-oral-exams-are-not-the-answer

My core thesis is that the only truly effective way to address genAI is to get at the root of student motivation. As you say, it’s not that assignments like take-home essays don’t have educational value. They do! But they must be done properly to be of value. We ought to be spending our time making the case for why the productive struggle is worthwhile, rather than trying to find stopgap solutions.

This is spot on! Nevertheless, I think mitigation is a good first step. Blue books and oral examinations are the two best tools we currently have at our disposal. They are NOT a solution to the problem, that’s correct, but slowing down the onslaught is worthwhile as we try to figure out a way to truly turn the tide.